I Tested Google’s Veo 3 Myself: Here’s What They Don’t Show You in the Keynote

My real-world test reveals the post-production work, environmental cost, and Silicon Valley hype behind the "effortless" videos

Last week brought a lot of big AI news, and one of the biggest stories was Google's new text-to-video model, Veo 3.

I take these video models personally, since they're being sold to consumers as a way to sidestep the work I do. Just pay these companies, type in a movie idea, and boom: instant cinema. Pop the popcorn and watch your own version of that animated feature I've been working on about Cleopatra's Cats.

(Don't steal my idea, y'all—or at least give me credit!)

So when the hype cycle began with Google's Veo 3, you knew I had to test it myself.

When Google unveiled Veo 3 at I/O 2025, the internet exploded with praise for clips that viewers said they couldn't distinguish from "human-made" content.

But video production has been my life for over 20 years. I've been playing with these AI tools since they hit the mainstream, and I even did a bootcamp with Le Wagon to learn machine learning and Python. So I can spot the signs of post-production work hiding behind those "AI-generated" showcase reels.

The pattern is predictable at this point. I'm old enough to remember when OpenAI's Sora "revolutionized" video generation in 2024.

Honestly, most of those demos were glorified montages.

Then reality set in. Sora's raw outputs, when they leaked, showed the familiar AI video problems: temporal inconsistencies, physics-defying nonsense, morphing objects, and the infamous "AI hands" that plagued earlier systems.

Now Google claims Veo 3 can generate flawless videos with synchronized audio from simple text prompts, and if you've been following along, you know exactly what I'm thinking: déjà vu.

Veo 3, like its predecessors, represents another chapter in Silicon Valley's favorite fairy tale: that human labor can be automated away, when the reality is far more complicated than these companies want to admit.

The Bottom Line Up Front:

After testing Veo 3 with two prompts, I got choppy footage with garbled audio that would need a full production team to become remotely usable: exactly the opposite of the "effortless AI generation" Google promises.

Testing Veo 3: The Reality Behind Google’s Polished Demos

Google's demos show Veo 3 generating videos that look convincingly real: people with the right number of fingers and limbs, synchronized audio, and "cinematic" quality.

So obviously, I had to try it myself. You know me: I can't resist putting these tools through their paces.

My Real-World Test Results

I tested Veo 3 on May 27th, 2025. For the first test, I pushed it hard on human emotion with a detailed cinematic prompt inspired by Spielberg's style: a soldier's emotional reunion with his young son, complete with specific requirements for golden-hour lighting, intimate camera movement, and scripted dialogue.

Here is the prompt 👇

The Reality: With my one free attempt, Veo 3 produced only a brief, low-quality clip that I would not call cinematic.

The audio? Random exclamations ("Oh!" "La!") instead of the requested dialogue. It also generated weird text artifacts at the bottom—a telltale sign of undertrained models that anyone who's worked with early AI video tools would recognize immediately.

Video quality? Poor, lacking the specified lighting, framing, or emotional depth.

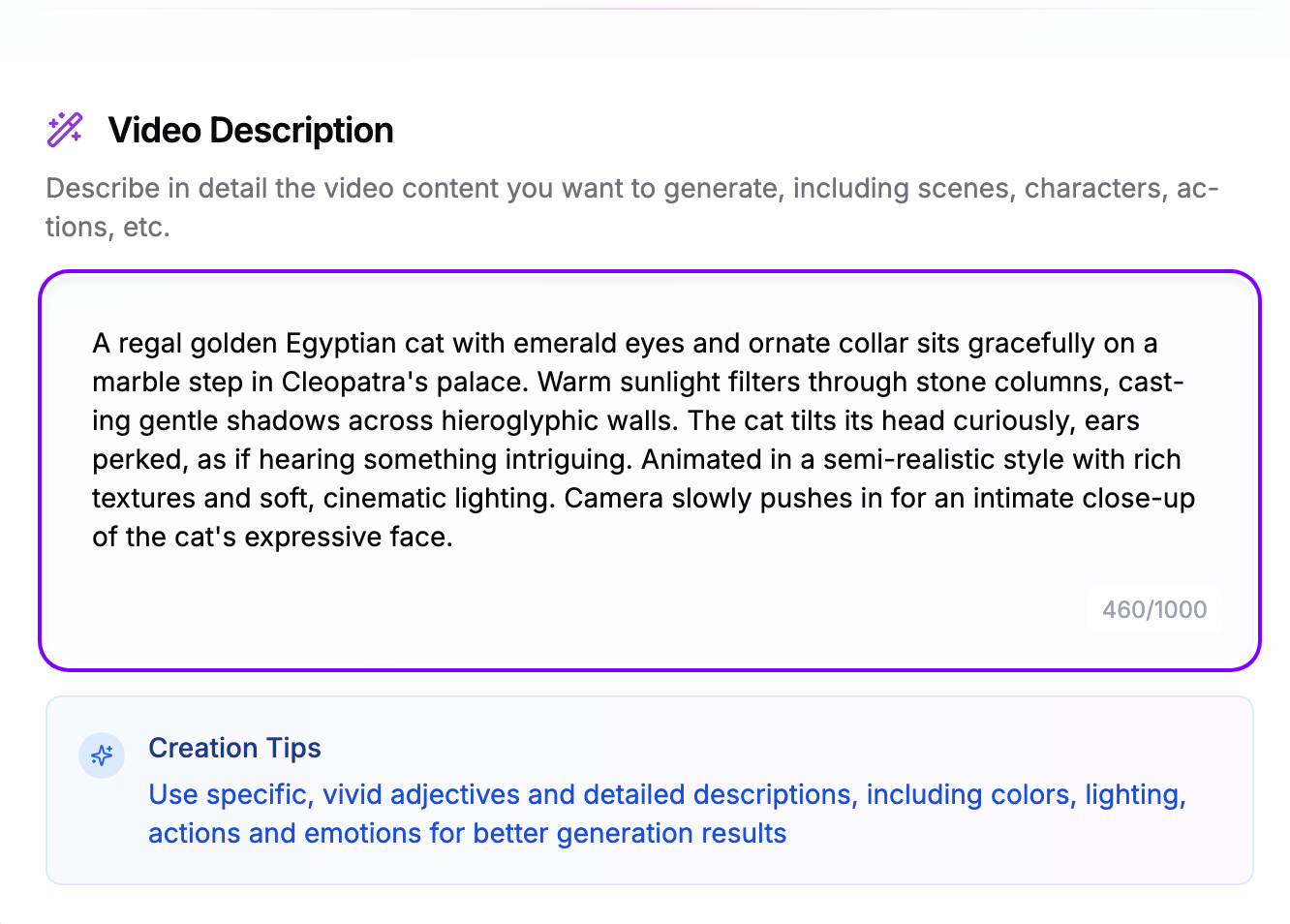

I switched Google accounts to try another free prompt with something I thought it would have better success with: my animated Cleopatra's Cats feature.

(Note: I'm not paying for more credits because Google gets enough from me—all my data and the cloud storage I pay for. As I've said before, stuff like this should be free since who knows what they trained this on.)

Here was my second prompt 👇

This five-second clip came out looking better, but you can tell there's distortion, and the cat sounds are totally random and sound like... well, cat poop.

By the Numbers:

2 free attempts (then $20/month for 163 prompts, $28/month for 488 prompts, or $72/month for 1,625 prompts). Here's the thing: the average feature film has around 1,250 shots, so even Google's top-tier plan might barely cover one movie, and only if every single prompt worked perfectly (which, as we've seen, they don't).

Maybe 5 seconds of usable footage for Cleopatra's Cats: The Movie, and even that would need better sound design and would still look amateurish because of the motion distortion

Hours of post-production work needed to make it remotely watchable
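To make the plan math concrete, here is a rough back-of-envelope sketch in Python. It uses only the prices, prompt allowances, and the ~1,250-shot figure quoted above. The attempts-per-shot values are hypothetical, since two tests aren't enough to measure a real success rate, and the sketch assumes one successful generation equals one finished shot, which is generous.

```python
# Back-of-envelope check of the "By the Numbers" math.
# Prices and prompt allowances are the figures quoted in this post (May 2025);
# check current plan pricing before reusing them.
import math

PLANS = {  # monthly price in USD -> prompts included per month
    20: 163,
    28: 488,
    72: 1625,
}
FEATURE_SHOTS = 1250  # rough shot count for an average feature film


def shots_covered(prompts: int, attempts_per_shot: int) -> int:
    """Shots you could finish in a month if each takes `attempts_per_shot` generations."""
    return prompts // attempts_per_shot


def months_for_feature(prompts: int, attempts_per_shot: int) -> int:
    """Months of subscription needed to generate every shot of a feature."""
    return math.ceil(FEATURE_SHOTS * attempts_per_shot / prompts)


for price, prompts in PLANS.items():
    print(f"${price}/month: ${price / prompts:.3f} per prompt")
    for attempts in (1, 2, 5):  # hypothetical attempts needed per usable shot
        print(
            f"  {attempts} attempt(s)/shot -> {shots_covered(prompts, attempts)} shots/month, "
            f"{months_for_feature(prompts, attempts)} month(s) for a feature"
        )
```

Under these assumptions, the top tier covers a whole feature in one month only if every prompt succeeds on the first try. At two attempts per shot it covers 812 shots a month, about two-thirds of a feature, and the $20 plan would take eight months even with perfect results.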

My conclusion: You're better off shooting with real humans. Cats, on the other hand...

Let's be honest—the first test I did produced garbage. The second one is better, but a far cry from what they were selling at the keynote.

Using only this tool to tell a story would require massive time, expertise, and energy consumption, just to create something barely watchable. And that's coming from someone who's salvaged plenty of bad footage in the edit.

The Post-Production Work Google Won’t Admit

Let's look at the showcase video Google presented. I can spot a lot of signs of extensive human intervention.

Here's the post-production work hidden behind those "effortless" results:

Color Grading and Visual Consistency: AI-generated clips often suffer from inconsistent lighting, color temperature shifts, and visual artifacts that require professional colorists and online editors to correct.

Audio Post-Production: While Veo 3 can generate sound, the quality clearly needs enhancement based on my tests—I couldn't get proper audio-to-video synchronization at all. No way did AI create the polished sound design in their demo videos without human intervention. I'm convinced they ran the dialogue through additional lip-sync software to match the mouth movements.

Composite Editing: The most impressive demos likely combine multiple AI generations. Editors must still select the best segments from dozens of attempts, splicing them together to hide the AI's failures and amplify its successes.

Prompt Engineering: Achieving professional results with these models requires extensive experimentation with prompts. The lack of shot-to-shot continuity makes seamless editing nearly impossible. Teams often generate hundreds of variations before achieving usable footage.

What's marketed as "effortless AI generation" actually represents a hybrid workflow in which human judgment and expertise remain essential but are conveniently hidden from public view.

From my point of view, if you want to make a full-length film that doesn't constantly remind viewers they're watching AI-generated content, every element—color, sound, edit timing—has to be seamless. Otherwise it becomes unwatchable.

For my soldier-returning-home prompt, I'd get better results shooting with real humans in my backyard. Even Cleopatra's Cats, which seemed more promising, would need massive post-production work.

If you think professional filmmaking is as simple as typing a few prompts, you're kidding yourself.

The Environmental Cost of “AI-Generated” Videos

Beyond the hidden human labor, there's an environmental issue that doesn't get discussed enough. These models burn through electricity faster than I burn through coffee trying to write coherent sentences about AI hype.

For every viral AI video, there are thousands of failed generations consuming power—essentially heating the planet to create content that would make a screensaver jealous.

The Hidden Energy Cost:

Each Veo 3 generation requires significant GPU processing power

Failed attempts (the majority) waste energy with no usable output

Professional projects might require 100+ attempts for a few shots

Training these models consumes energy equivalent to thousands of homes annually

Think about it this way: my first failed attempt consumed the same energy as the somewhat successful one. Scale that across millions of users generating billions of clips, and the carbon footprint becomes staggering, all for content that still needs human expertise to become usable.

Traditional video production, while resource-intensive, produces predictable results. AI generation creates environmental waste through computational inefficiency.

We've Seen This Movie Before

OpenAI's Sora announcement in February 2024 followed a similar playbook. Initial demos showed breathtaking videos of woolly mammoths in snowy landscapes, bustling Tokyo street scenes, and surreal artistic visions that seemed impossible for AI to create.

Sora's announcement sparked widespread speculation about AI's role in filmmaking.

Media coverage often emphasized its potential to reduce production costs, while online forums did what online forums do—went into doomsday mode and debated whether human creativity could become obsolete.

Filmmaker Tyler Perry even paused a studio expansion after testing AI tools like Sora, stating, 'Jobs are going to be lost — this is a real danger.' (Sounds like a good excuse to get out of a deal to me.)

Though no major studios announced immediate layoffs, freelance editors and VFX artists reported growing anxiety about job security in online communities like Reddit's r/Filmmakers.

Follow the Money: Why AI Companies Oversell Automation

Here's something I've learned from being in and covering this industry: you have to follow the money to understand the AI hype cycle.

Tech companies don't just oversell AI capabilities to consumers—they weaponize these claims to drive investment rounds, IPO valuations, and stock prices.

Key Insight: The promise of "job replacement at scale" has become Silicon Valley's most potent fundraising tool right now.

The Venture Capital Pressure Cooker

Consider the economic incentives at play:

Venture Capital Pressure: Investors demand exponential growth potential. A tool that "augments human creativity" doesn't justify billion-dollar valuations the way "replacing entire creative industries" does. Companies learn to pitch disruption, not collaboration.

The valuation gap tells the story perfectly. Adobe's $240 billion market cap, built over decades by creating tools that enhance human creativity, contrasts sharply with OpenAI's meteoric rise to a $90+ billion valuation in just a few years, even though OpenAI generates a fraction of Adobe's actual revenue. The difference? Adobe sells creative empowerment; OpenAI sells creative replacement.

Adobe's Photoshop, Premiere Pro, and Creative Suite transformed industries by making professional-grade tools accessible to millions of creators. Adobe's "creativity for all" messaging emphasizes human agency: AI features like Content-Aware Fill or auto-transcription are marketed as time-savers that free artists to focus on vision and storytelling.

OpenAI's astronomical valuation, by contrast, is built on the promise that human creativity isn’t necessary. Investors aren't paying $90 billion for a better Photoshop; they're betting on a future where Photoshop users and coders become obsolete.

This fundamental difference in philosophy drives everything from product development to marketing messaging.

The result is a perverse incentive structure where AI companies must continuously escalate their replacement rhetoric to justify ever-higher valuations, even when their technology works best as an augmentation tool.

A startup promising to "help filmmakers work faster" might raise $10 million; one claiming to "eliminate human directors entirely" can command $100 million—regardless of whether the technology actually works.

What This Means for Your Career

Many of my readers work in creative industries. If you're a creative professional and you're as anxious as I am about the state of creative labor, here's my advice:

Document your expertise: AI can't replicate the human judgment that goes into creative decisions. Make sure your value is visible.

Stay informed but skeptical: When executives come to you with AI tools, ask for proof-of-concept testing before anyone makes staffing decisions or rolls these tools out.

Build alliances: The creative professionals I know who are thriving are those collaborating rather than competing with each other.

Building AI That Actually Helps Humans

The tragedy of current AI development isn't that the technology lacks potential—it's that this potential is being squandered in service of short-term profits rather than long-term human flourishing.

Veo 3 and similar tools could genuinely assist human creativity, reduce tedious work, and democratize creative production. But realizing this vision requires fundamental changes to how AI is developed, marketed, and deployed.

What Honest AI Development Could Look Like

Require Transparency in Marketing: Companies should disclose the human labor behind AI demonstrations. If a video needed 50 hours of post-production, say so.

Center Workers in Development: AI tools should be designed with input from affected workers. Focus on reducing repetitive tasks while preserving human agency and creativity.

Compensate Training Data Sources: Artists, writers, and creators whose work trains AI models deserve fair payment and consent.

Choose Evolution Over Revolution: Focus on tools that help humans work more efficiently rather than promising wholesale replacement of creative workers.

The Questions That Matter

The next time you see a breathtaking AI demo, ask yourself:

How many attempts did this take?

What human work happened after generation?

Who benefited from the hype, and who paid the price when reality didn't match the promises?

These aren't just technical questions — they're moral ones.

I think AI is fascinating and could help humanity solve real problems: hunger, environmental disaster, and work-life balance. It could also make shorter work weeks possible and ease worries about how declining birth rates will affect Social Security. AI built on the backs of all human ingenuity should benefit everyone.

Veo 3 and other AI video software could become powerful creative tools. But first, we need to stop accepting Silicon Valley's automation theater and start building technology that serves human creativity rather than replacing it.

What I'm Curious About: Have you tested any AI tools recently? Did they live up to the hype, or did you find similar gaps between marketing and reality?

If this resonated with your experience in the industry, consider sharing it with a colleague who might benefit from a reality check on AI hype.

And if you're getting value from these deep dives into media and AI, consider upgrading to a paid subscription to support this independent analysis (and maybe help fund my Cleopatra's Cats feature—yes, I know I should have tested Veo 3 with THAT prompt first).

Next week: I'm going to be more uplifting and give you some recommendations for music, books, TV, and movies. So send me any recommendations I can try and review.

Until next time, keep the arts human.

Wesley

This is a newsletter I write weekly analyzing media, technology, and the intersection of human creativity with AI. Wesley Swinnen is a Grammy/Emmy-nominated editor/producer with over 20 years in television and audio production.